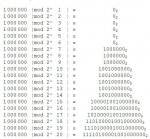

Okay, I tried to convert 1 million to binary by dividing by a power of 2, taking the remainder, dividing that remainder by a power of 2, and so on, and I got this:

1111010000100100000

Google says 1 million in binary is:

11110100001001000000

Why is my result from the modular arithmetic 19 bits, while the Google search for 1 million in binary comes up with 20 bits? Do they take it one step further, to the halves place or something?
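For checking the bit count, here is a minimal Python sketch of the divide-by-powers-of-2 procedure described above. The function name `to_binary_by_powers` is made up for illustration, and it assumes the input is a positive integer. The point it shows is that the loop has to run all the way down to 2**0 (the ones place), so a number whose largest power of 2 is 2**19 gets 20 digits, not 19.

```python
def to_binary_by_powers(n):
    """Convert a positive integer to a binary string by dividing
    by descending powers of 2 and carrying the remainder forward."""
    # Find the largest power of 2 that does not exceed n.
    power = 1
    while power * 2 <= n:
        power *= 2

    digits = []
    # Walk down through every power, including 2**0 (the ones place).
    while power >= 1:
        quotient, n = divmod(n, power)  # quotient is the digit, n becomes the remainder
        digits.append(str(quotient))
        power //= 2
    return "".join(digits)


result = to_binary_by_powers(1_000_000)
print(result, len(result))
```

Comparing `result` against Python's built-in `bin(1_000_000)[2:]` is a quick way to see whether a hand calculation dropped a place.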

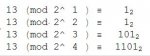

I mean, modular arithmetic should work for converting any base-10 number to the same number in binary. How would it work for base 3 and larger, though, since those bases have more digits you can use?
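For the larger-base case, a rough sketch of one common approach is repeated division by the base, collecting remainders. This is a swapped-in variant, not exactly the divide-by-descending-powers method above: each remainder is one digit, and it can be anything from 0 up to base minus 1, which is where the extra digits come in. The names `DIGITS` and `to_base` are hypothetical, and the sketch assumes a non-negative integer and a base between 2 and 36.

```python
# Digit symbols for bases up to 36 (an assumption for this sketch).
DIGITS = "0123456789abcdefghijklmnopqrstuvwxyz"


def to_base(n, base):
    """Convert a non-negative integer to a string in the given base
    by repeated division, collecting the remainders."""
    if not 2 <= base <= len(DIGITS):
        raise ValueError("base must be between 2 and 36")
    if n == 0:
        return "0"

    out = []
    while n > 0:
        n, remainder = divmod(n, base)  # remainder is the next digit, lowest place first
        out.append(DIGITS[remainder])
    # Remainders come out lowest place first, so reverse them.
    return "".join(reversed(out))


print(to_base(1_000_000, 2))
print(to_base(1_000_000, 3))
```

Python's built-in `int(text, base)` converts back the other way, so `int(to_base(1_000_000, 3), 3)` is a handy round-trip check.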

